Hello Fraction,

Thank you for your detailed and insightful response.

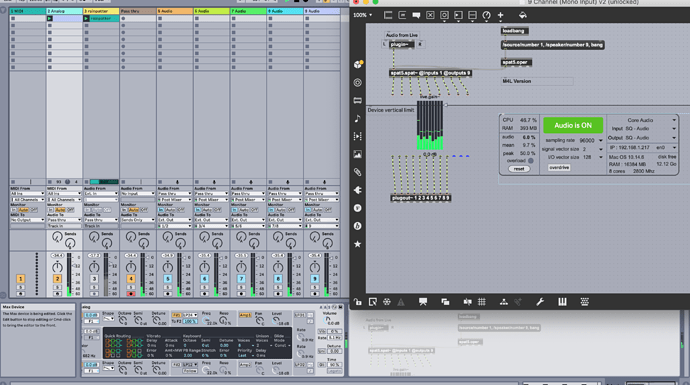

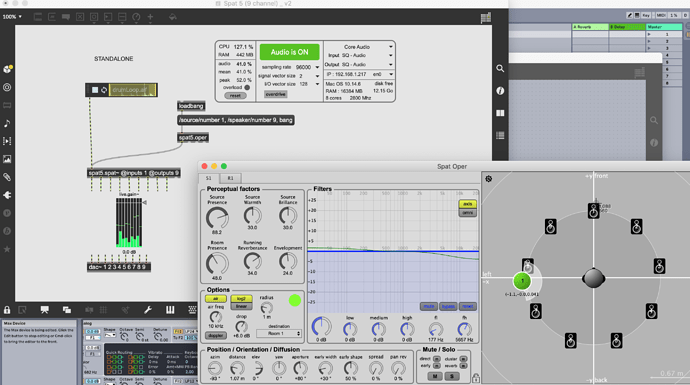

Your reasons are very much in line with my hopes for a ‘technician’s answer’ to an amateur’s wishes. I recently spent $200 on a programmer recommended by Cycling74, only to come up with a barely usable version of SPAT in M4L. His explanation was difficult to follow and to recreate.

As with many M4L patches, Live users either:

- do not know what Max components are needed to achieve their goals, or how those components need to be configured, or
- lack the motivation to research how to achieve their goals and are simply waiting for someone to do the work for them.

I am in the middle of these two.

My goal is to have configurations of SPAT in M4L that can address my changing needs. For instance, I am working with an improvising violinist who wants to have her playing spatialized for a live radio performance (so it would be a ‘virtual spatialisation’ for stereo reproduction).

I myself use CataRT and OMax, which I route through Live so that I can apply various effects to their outputs. I would like to be able to spatialise their outputs on my own 4-speaker and 8-speaker systems.

As projects evolve, I find that the way I need SPAT configured for use in Ableton keeps changing too. The SPAT tutorial video is too small on my 15" screen for me to read the values being entered, so the tutorial’s information is unusable for me. I wrote to him with questions but did not receive a reply.

Fraction, it seems you both understand what I and other Live users might be looking for and have the technical expertise to create the kind of M4L patches needed for SPAT’s integration with Live.

I believe Greg Beller would be an excellent advocate for any alpha or beta testing that will be necessary to find the ‘sweet spot’ for the M4L .amxd patches you might find the time and energy to create.

You will have very grateful users thanking you.

Best wishes,

Glen
