Hey everyone!

My name is Vincent Cusson, and I’m an audio technologist interested in interactive music and instrument design.

We’ve had the chance to experiment with one of the first versions of the tool since 2022.

It all started in preparation for Tommy Davis’ doctoral recital, which focused on human-machine improvisations.

I remember being intimidated at the time by all the settings and options available. We had to find a balance between aiming for a thorough understanding of the system and keeping our focus on the resulting artistic outcome. The concert went well (in the sense of “without a crash”) but we felt we hadn’t even scratched the surface of what a tool like this could offer.

We then decided to work on our own performance setup including the Dicy2 library. The project, called eTu{d,b}e, simultaneously refers to the name of the eTube instrument and to a series of improvised études based on human-computer musical interactions.

The latest version of the eTube controller

The intent behind the development of this augmented instrument was to allow the musician to communicate with the agents during a performance. In addition to the corpus creation and curation, we identified various parameters in the patch which seemed to afford certain kinds of interaction in real time.

An eTu{d,b}e performance presented at NIME in 2022

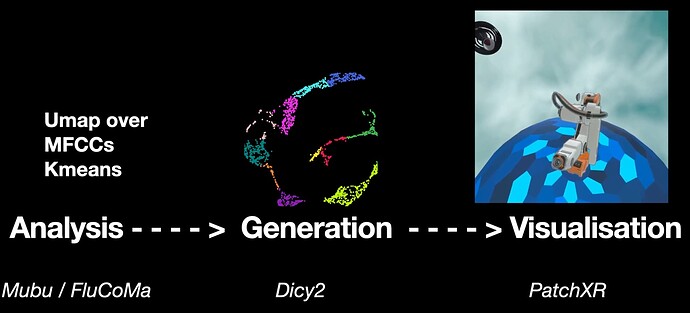

The following year, Kasey Pocius joined the team to work on interactive spatialization models for the agents. A lot of work was done to update the library and implement multiple cool features. In the latest iteration, we have combined Dicy2 with other improvising frameworks to extend our research on musical agents.

This new setup can be seen in action in this video:

Our work with these tools has also led to some publications in the past two years.

Looking back, it is interesting to observe the correlation between our understanding of these systems and the artistic output over time. We will try to update this thread with upcoming work, and we are looking forward to seeing how you are using Dicy2 and to exchanging on the subject.

Thanks to @jnika for their advice and support!

This new thread aims to list resources (dates, shows, videos, articles, media, etc.) related to creative projects using Dicy2 for Max or Dicy2 for Live by Forum members.

Another thread dedicated to Ircam projects using Dicy2 is available here.

To share technical issues (detailed setups, feedback, suggestions, bug reports, feature requests, etc.), please use the Dicy2 for Max Forum discussion or the Dicy2 for Live Forum discussion.

Feedback is very important to us! Sharing your setups and projects will also make it possible to inspire and be inspired by other projects!

![eTu{d,b}e (fall 2022) [excerpt]](https://img.youtube.com/vi/n97wToOFiJo/hqdefault.jpg)