Hi Thibaut,

I have been browsing several threads as well as the Spat tutorials about HOA and binaural, but I'm still wondering which strategy is the most efficient in terms of both CPU economy and creativity. I need to spatialize a scene with many sources, as well as a 4-channel B-format audio stream (a room ambiance that I recorded with a tetramic and that won't require any processing).

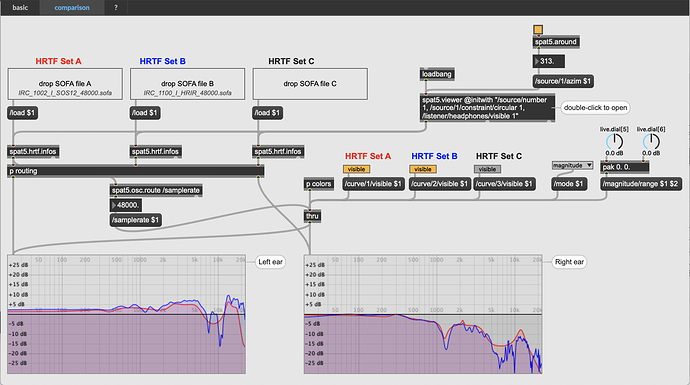

It seems that combining HOA with binaural is more CPU-efficient when spatializing multiple sources. I have tried to compare the three options (spat5.hoa.binaural vs. spat5.virtualspeakers vs. spat5.binaural) in the spat5.hoa.binaural~ example of the overview, but I don't see an obvious difference.

What would you suggest? Is spat5.hoa.binaural reliable? I read in one of the tutorials “use at your own risk”…

I have so far used spat5.spat with the binaural panning type. This solution was OK since I didn't have many sources, but I now think I should switch to the HOA-binaural combination. I will have at least 15 sources, including a 4-channel B-format audio stream. Is this combination actually better in this case?

So far, I have used spat5.spat, along with spat5.oper, to manage the positioning and perceptual parameters of the sources in binaural.

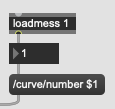

If I were to use a combination such as spat5.hoa.encoder with spat5.hoa.binaural or spat5.virtualspeakers, how can I keep control over the positioning and perceptual parameters (without spat5.spat)? Should spat5.spat remain in the processing chain, or how can it be replaced?

I believe I could use nm0's approach from the thread "Ambisonic Synthesis + Decoding in spat5.spat" and place a spat5.spat object upstream, before the spat5.decoder?

There are many tutorials and examples involved. Let me know if I should provide a patch. I'd rather understand the underlying logic beforehand.

Thanks in advance!

Coralie