Hello!

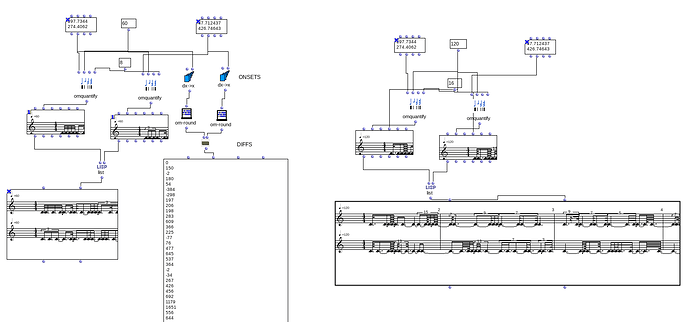

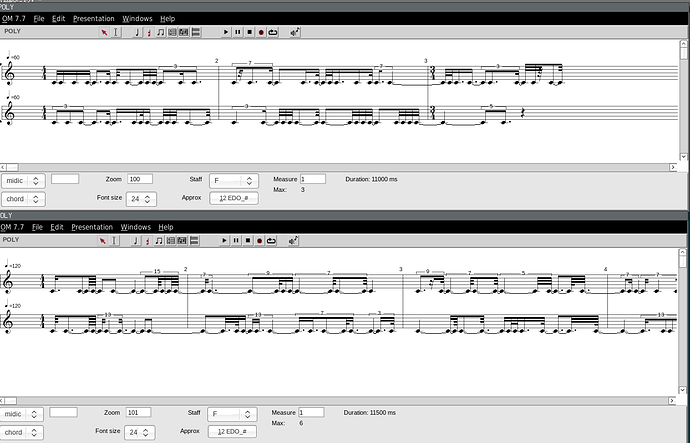

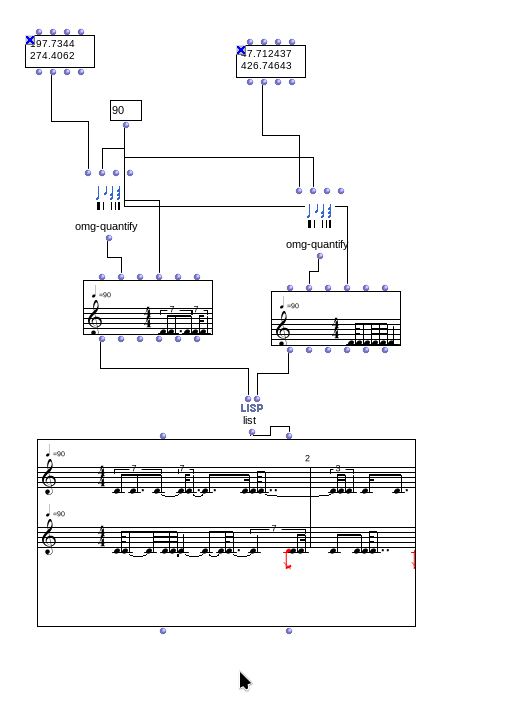

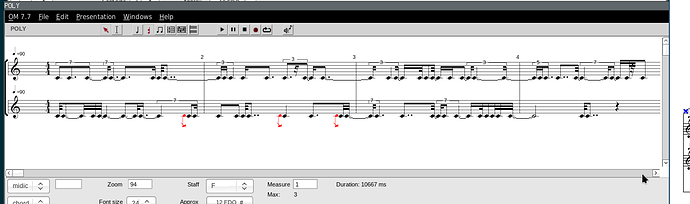

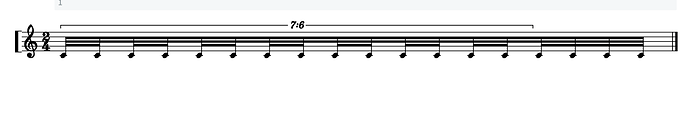

I am looking for ways to perform polyphonic rhythm quantization on nested lists of durations (in milliseconds), where each sublist is one rhythmic layer.

What I would like is a quantization process that takes into account how much the rhythmic layers overlap. During quantization, the attacks of each layer would be shifted slightly so that the layers share no attacks, or at least as few as possible. Of course, with many layers, and depending on the rhythmic structure, a perfect result may not always be achievable. The goal is to minimize simultaneous attacks wherever possible while keeping each rhythm as close to the original as possible.

An easy way would be to simply give one rhythmic layer a different quantization unit than the others (for example 1/20 against 1/16), but this does not seem ideal to me. Any suggestions are welcome.
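To make the goal more concrete, here is a minimal sketch in Python of one possible approach, a greedy pass over a shared grid. It is only an illustration under several assumptions: the function names (`onsets_ms`, `quantize_layers`, `shared_attacks`), the parameters `grid_ms`, `max_shift`, and `collision_penalty`, and the cost function are all hypothetical and not taken from any existing library. Each attack is snapped to the grid slot that minimizes its timing error plus a penalty if an earlier layer already has an attack in that slot.

```python
from itertools import accumulate


def onsets_ms(durations):
    """Turn a list of durations (ms) into attack times (ms), starting at 0."""
    return [0] + list(accumulate(durations))[:-1]


def quantize_layers(layers, grid_ms, max_shift=1, collision_penalty=None):
    """Greedy sketch: snap each attack to a grid slot, avoiding occupied slots.

    layers            -- nested list of durations in ms, one sublist per layer
    grid_ms           -- length of one grid slot in ms (assumed shared by all layers)
    max_shift         -- how many slots an attack may move away from its nearest slot
    collision_penalty -- extra cost (ms) for landing on a slot used by an earlier layer
    Returns one list of grid-slot indices per layer.
    """
    if collision_penalty is None:
        # Assumption: a collision costs more than moving by one slot.
        collision_penalty = 2 * grid_ms

    occupied = set()  # slots already used by previously processed layers
    result = []
    for durations in layers:
        slots = []
        prev = -1  # keeps attacks within a layer strictly increasing
        for t in onsets_ms(durations):
            nearest = round(t / grid_ms)
            candidates = [
                s for s in range(nearest - max_shift, nearest + max_shift + 1)
                if s > prev
            ]
            if not candidates:
                candidates = [prev + 1]

            def cost(s):
                error = abs(s * grid_ms - t)
                penalty = collision_penalty if s in occupied else 0
                return (error + penalty, s)  # ties resolve to the earlier slot

            best = min(candidates, key=cost)
            slots.append(best)
            prev = best
        occupied.update(slots)
        result.append(slots)
    return result


def shared_attacks(quantized):
    """Count grid slots that are attacked by more than one layer."""
    seen, shared = set(), set()
    for slots in quantized:
        for s in slots:
            (shared if s in seen else seen).add(s)
    return len(shared)
```

For example, `quantize_layers([[500, 500, 500], [500, 250, 250]], grid_ms=125)` keeps the first layer on its exact slots and nudges the second layer off the two attacks they would otherwise share. Because the pass is greedy, earlier layers get priority and the result is not guaranteed to be optimal; a global search over all layers would be needed for that.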

Thank you!