I looked into some details of onseg:

First, it is astonishing that it works so well, even without using the abs delta. You can compare this with onseg.odfmode square or rms, which use the squared difference from the median.

Second, the sum hides a lot of information from onseg. Without it, each band’s difference to its median is taken and then summed (onseg.numcols starting at onseg.colindex).
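To illustrate what the sum hides, here is a minimal sketch. It is not the actual onseg code: the function names (`median`, `odfPerBand`, `odfSummed`), the frame layout (one array of band values per frame) and the median window (simply the list of past frames passed in) are all assumptions made for the example.

```javascript
// Hypothetical sketch, NOT the onseg implementation.

// Median of an array of numbers.
function median(values) {
    var sorted = values.slice().sort(function (a, b) { return a - b; });
    var mid = Math.floor(sorted.length / 2);
    return sorted.length % 2 ? sorted[mid] : (sorted[mid - 1] + sorted[mid]) / 2;
}

// Per-band variant: each band's difference to its own median is taken,
// optionally squared, and only then summed over the bands.
function odfPerBand(history, frame, squared) {
    var total = 0;
    for (var b = 0; b < frame.length; b++) {
        var past = history.map(function (f) { return f[b]; });
        var diff = frame[b] - median(past);
        total += squared ? diff * diff : diff;
    }
    return total;
}

// Summed-first variant: the bands are collapsed into one value per frame
// before the difference to the median is taken.
function odfSummed(history, frame) {
    function sum(f) {
        return f.reduce(function (acc, x) { return acc + x; }, 0);
    }
    return sum(frame) - median(history.map(sum));
}

// Energy moves from band 0 to band 1 while the total stays the same.
var history = [[1, 0], [1, 0], [1, 0]];
var frame = [0, 1];
var summedFirst = odfSummed(history, frame);
var perBandSigned = odfPerBand(history, frame, false);
var perBandSquared = odfPerBand(history, frame, true);
```

In this toy case the summed-first value and the signed per-band value are both zero, because the two band changes cancel. Only the squared per-band difference reacts to the change.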

More to come…

Dear Diemo, of course it is fine with me to use my patch as a starting point.

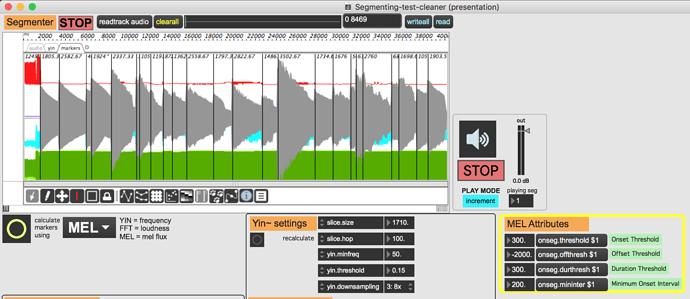

Here is the .mubu and a screenshot of adjusted Mel parameters for the Rhodes example.

Rhodes-Mel-segmentation.mubu (1.0 MB)

Regarding yin segmentation: Actually I am surprised that the flute example works so well because I thought that onseg was only looking for upward changes when pointed to the frequency column (and thought I had verified that!).

Can I propose a tougher example? I have trouble with this one and would be curious to know if either of you have suggestions or success:

Hi Chris,

That last one was tough! I didn’t get satisfactory results with any of the detectors.

Right now, I’m on the verge of going full circle and concluding that, for all its flaws, the standard FFT detector is the best compromise when dealing with large amounts of audio, because the yin- and mel-based detectors are more specialised: they deal with the types of onsets that FFT can’t handle.

Wouldn’t it be great to have a machine learning algorithm that could decide which algorithms to use based on categories of input? Now that’s a project…

Thanks for having a look. Yes, this is where it gets complicated… Maybe another option, more within reach than machine learning, would be some system that performs different types of analyses and compares results - confirming clear onsets when data points coincide or rejecting those that seem not to work.
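A minimal sketch of that coincidence idea, under stated assumptions: each detector delivers a list of onset times in milliseconds, onsets are grouped when they fall within a tolerance of the first onset of the group, and a group counts as confirmed when enough different detectors contributed to it. The function name `confirmOnsets`, the detector names and all the numbers are hypothetical.

```javascript
// Hypothetical sketch: confirm onsets on which several detectors agree.
// detections: { detectorName: [onset times in ms], ... }
function confirmOnsets(detections, toleranceMs, minVotes) {
    // Flatten all detections into one time-sorted list of events.
    var events = [];
    Object.keys(detections).forEach(function (name) {
        detections[name].forEach(function (t) {
            events.push({ time: t, source: name });
        });
    });
    events.sort(function (a, b) { return a.time - b.time; });

    var confirmed = [];
    var i = 0;
    while (i < events.length) {
        // Collect every event within toleranceMs of the group's first event.
        var start = events[i].time;
        var sources = {};
        var sum = 0;
        var count = 0;
        while (i < events.length && events[i].time - start <= toleranceMs) {
            sources[events[i].source] = true;
            sum += events[i].time;
            count++;
            i++;
        }
        // One vote per detector, however many events it contributed.
        var votes = Object.keys(sources).length;
        if (votes >= minVotes) {
            confirmed.push({ time: sum / count, votes: votes });
        }
    }
    return confirmed;
}

// Made-up onset times for three detectors.
var detections = {
    fft: [100, 500, 900],
    yin: [110, 905],
    mel: [95, 300, 910]
};
var agreed = confirmOnsets(detections, 20, 2);
```

With these made-up times, the isolated detections at 300 ms and 500 ms are rejected, and the two groups on which all three detectors agree are kept. Setting `minVotes` to 1 keeps every group, which amounts to simply merging the streams.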

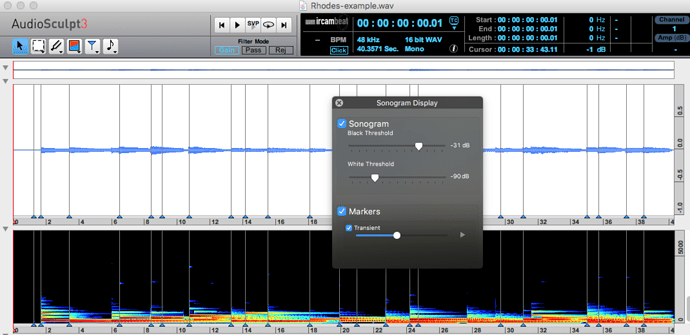

For me, the ideal would be to somehow replicate the slider in AudioSculpt:

Of course this is kind of equivalent to changing the threshold for onseg segmentation, but the dream would be a slider that allows one to move between combined (weighted!?) data from FFT / YIN / and spectral differencing analyses…

Yep, that’s called fusion. Would it be a workable first step to combine all of the segmentations into one stream? In your experience, does yinseg catch stuff which loudness doesn’t, or would it produce too many false positives for too many sound classes?

Based on my limited experience, yin tends to capture less overall than loudness, but sometimes it captures continuous pitch changes which loudness ignores.

The mel detection algorithm, on the other hand, is trickier to control as of now. There is a very fine line (dependent on threshold) between capturing many false positives and nothing at all.

Exactly: yinseg can catch slurred or continuous notes better than loudness detection. However, yinseg is not useful if there is contrapuntal motion, for instance, whereas mel can catch changes in individual voices.

If we can get all segmentations into one stream, that would be helpful, but weeding out the false positives will be tricky.

One idea might be to create a series of defined presets (“midrange percussive,” “treble legato,” “contrapuntal”) that would give different weights to the various detection algorithms.
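As a rough sketch of how such presets could work: assume each detector produces a detection curve normalised to the range 0 to 1, with one value per frame. A preset is then just a set of weights, and the fused curve is thresholded as usual. The weight values below are arbitrary placeholders, not tuned settings, and the names `presets`, `fuse` and `pickOnsets` are hypothetical.

```javascript
// Hypothetical presets: the weights are placeholders, not tuned values.
var presets = {
    "midrange percussive": { fft: 0.7, yin: 0.1, mel: 0.2 },
    "treble legato":       { fft: 0.2, yin: 0.6, mel: 0.2 },
    "contrapuntal":        { fft: 0.3, yin: 0.0, mel: 0.7 }
};

// Weighted sum of the detection curves, frame by frame.
// curves: { detectorName: [value per frame], ... }, all of equal length.
function fuse(curves, weights) {
    var names = Object.keys(weights);
    var length = curves[names[0]].length;
    var fused = [];
    for (var i = 0; i < length; i++) {
        var value = 0;
        for (var n = 0; n < names.length; n++) {
            value += weights[names[n]] * curves[names[n]][i];
        }
        fused.push(value);
    }
    return fused;
}

// Frame indices where the fused curve rises through the threshold.
function pickOnsets(fused, threshold) {
    var onsets = [];
    for (var i = 0; i < fused.length; i++) {
        var previous = i > 0 ? fused[i - 1] : 0;
        if (fused[i] >= threshold && previous < threshold) {
            onsets.push(i);
        }
    }
    return onsets;
}

// Toy curves: each detector fires on a different frame.
var curves = {
    fft: [0, 1, 0, 0],
    yin: [0, 0, 0, 1],
    mel: [0, 0, 1, 0]
};
var percussive = pickOnsets(fuse(curves, presets["midrange percussive"]), 0.5);
var legato = pickOnsets(fuse(curves, presets["treble legato"]), 0.5);
var contrapuntal = pickOnsets(fuse(curves, presets["contrapuntal"]), 0.5);
```

With the same three curves, each preset picks a different frame. The threshold plays the role of the AudioSculpt-style slider, and the weights decide which detector it listens to most.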

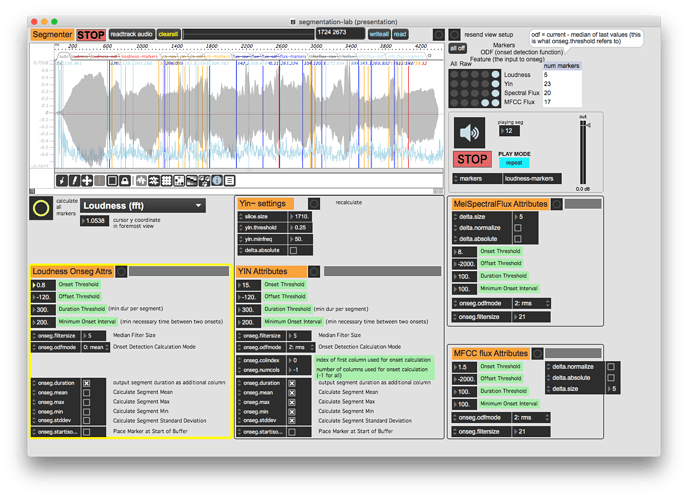

So here is a first version of the Segmentation Laboratory:

Here you can run 4 segmenters, and look at the data in various processing steps. It should use only features in v1.9.14, but we found that the editor is kind of stubborn, so you’ll have to resend the view setup quite often.

segmentation-lab.maxpat (244.0 KB)

attrchanged.js (1.1 KB) [EDIT: this file doesn’t seem to be downloadable as .js, here is its source:]

```javascript
// attrchanged.js: listen to change in obj of given list of attrs

// listener callback: output the attribute name and its new value
function valuechanged (data)
{
    if (data.attrname)
    {
        outlet(0, data.attrname, data.value);
    }
}

function loadbang ()
{
    this.bang();
}

// (re)install the listeners
function bang ()
{
    if (jsarguments.length < 2)
    {
        error("first argument must be scripting name of listened to object");
        return; // nothing to listen to
    }

    var ob = this.patcher.getnamed(jsarguments[1]); // get the object in 1st arg
    if (!ob)
    {
        error("no object named "+ jsarguments[1] +" in this patcher");
        return;
    }

    var obattrs = ob.getattrnames();
    var listento = [];
    var listeners = [];

    if (jsarguments.length == 2)
        listento = obattrs; // no attr arg: listen to all
    else
        listento = jsarguments.slice(2);

    for (var i = 0; i < listento.length; i++)
    {
        if (obattrs.indexOf(listento[i]) >= 0)
            listeners[i] = new MaxobjListener(ob, listento[i], valuechanged);
        else
            error(ob.maxclass +" object "+ jsarguments[1] +" does not have attribute "+ listento[i]);
    }

    gc();
}
```

Have fun!

…Diemo

Very nice work! This should be fun.

Thanks, Diemo! Strangely, I can’t download "attrchanged.js" from the forum site; I get this odd error message:

Perhaps you could upload a .zip (or email me an attachment)? Thanks, and more soon.

Here’s a new version of segmentation-lab with more stats and Hz-to-MIDI scaling of yin before the delta: seglab v2.zip (28.4 KB)
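For reference, a minimal sketch of what scaling to MIDI before the delta buys. It is not taken from the patch: it assumes the usual convention that A4 = 440 Hz is MIDI note 69, it assumes all frequencies are positive (unvoiced frames would need separate handling), and the function names are hypothetical.

```javascript
// Hypothetical sketch: convert Hz to (fractional) MIDI pitch before differencing.
// Assumes the common convention A4 = 440 Hz = MIDI note 69.
function hzToMidi(hz) {
    return 69 + 12 * Math.log(hz / 440) / Math.LN2;
}

// Frame-to-frame pitch change in semitones.
// Assumes every frequency is > 0; a 0 Hz frame would give -Infinity.
function midiDeltas(freqs) {
    var deltas = [];
    for (var i = 1; i < freqs.length; i++) {
        deltas.push(hzToMidi(freqs[i]) - hzToMidi(freqs[i - 1]));
    }
    return deltas;
}

// Two octave leaps: 220 Hz and 440 Hz apart, but 12 semitones each.
var octaveLeaps = midiDeltas([220, 440, 880]);
```

In Hz the second leap is twice as large as the first, while in MIDI units both are 12 semitones, so one threshold on the delta behaves the same in every register.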

Next version will use new features and fixes of mubu 1.9.15.

Thanks, Diemo. I am finding myself with less time than I’d hoped to dig into this right away, but I am very much looking forward to it — C

Hi guys, why not use a project at this point?

You can put it in git and do versioning…

Let me know if I can help

By popular demand, this patch is now the seed of a Forum project:

https://forum.ircam.fr/projects/detail/segmentation-lab/

Please use all GitLab and forum niceties (issues, pull requests, discussion forums) to collaborate!