Hello,

While working through the Antescofo Max tutorials (v. 1.0-410) on a Mac (macOS 12.6), I have run into another major inconsistency.

The @local attribute (tutorial 14 onward) does not behave at all as expected.

1.) The first problem occurs even without @local. When I play the first example in the tutorial with a random (wrong) second note, Antescofo performs the action associated with the second note exactly as if a D4 had been played. This does not match the behaviour described in the tutorial. Is Antescofo behaving incorrectly, or is the tutorial's description unclear?

The “normal” behaviour (i.e. waiting for the last, correct note to be played and only then performing the actions associated with the previous events) is achieved only when both of the first two notes are played incorrectly!

The same happens with the second score example.

2.) With the @local attribute, the score syntax of the example is probably incorrect, or at least inconsistent with what was stated earlier (i.e. that delays can make actions happen after the onset of subsequent events). This produces erratic behaviour of @local: it is sometimes handled correctly (@local actions are not performed if their parent event is skipped) and sometimes not (@local actions are performed even though the event is skipped, as if @local were absent).

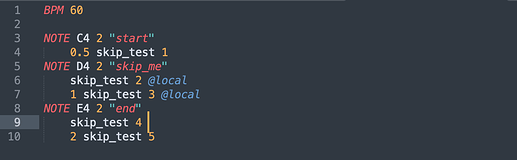

In this specific case, I observe that @local actions are always handled correctly when their delay falls “inside” the duration of their “parent” event. See this example:

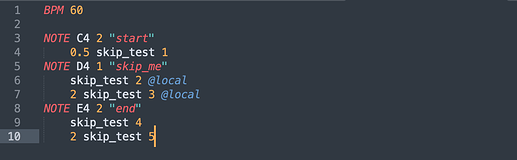

With a score similar to the one originally provided (example 2), the second @local action is performed anyway (i.e. with a wrong second note, “2 skip_test 3 @local” is performed immediately before “skip_test 4” when the NOTE E4 is played).

My understanding is that this happens because “2 skip_test 3 @local” is positioned after the note E4 on the timeline, since its delay exceeds the duration of its “parent” note D4. Antescofo therefore still performs this action because of how it interprets the score.
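To make this timing argument concrete, here is a toy Python model of what I *think* is happening. It is only a sketch of my hypothesis and not Antescofo's actual scheduler. The note durations (C4 = 1 beat, D4 = 1 beat, E4 = 2 beats), the extra action “skip_test 2” and all helper names are hypothetical. The action's position is taken as its parent's onset plus its delay. A @local action is assumed to be dropped only if that position still lies inside its parent's span when the parent is missed.

```python
from dataclasses import dataclass


@dataclass
class Event:
    pitch: str
    duration: float  # in beats (hypothetical values)


@dataclass
class Action:
    parent: int      # index of the event the action is attached to
    delay: float     # delay in beats, relative to the parent's onset
    message: str
    local: bool = False


def onsets(events):
    """Cumulative onset (in beats) of each event, starting at beat 0."""
    result, t = [], 0.0
    for ev in events:
        result.append(t)
        t += ev.duration
    return result


def host_event(events, position):
    """Index of the last event whose onset is <= position,
    i.e. the event whose span the position falls into."""
    host = 0
    for i, start in enumerate(onsets(events)):
        if start <= position:
            host = i
    return host


def fires_when_parent_missed(events, action):
    """Hypothesis only: a @local action is dropped if it still lies inside
    its parent's span; once its delay pushes it into a later event's span,
    it is performed as if @local were absent."""
    position = onsets(events)[action.parent] + action.delay
    return (not action.local) or host_event(events, position) != action.parent


if __name__ == "__main__":
    events = [Event("C4", 1.0), Event("D4", 1.0), Event("E4", 2.0)]
    actions = [
        Action(1, 0.5, "skip_test 2", local=True),  # delay inside D4's duration
        Action(1, 2.0, "skip_test 3", local=True),  # delay exceeds D4's duration
        Action(2, 0.0, "skip_test 4"),              # attached to E4
    ]
    for a in actions:
        pos = onsets(events)[a.parent] + a.delay
        host = events[host_event(events, pos)].pitch
        print(f"{a.message}: beat {pos}, lands in {host}, "
              f"fires if D4 missed: {fires_when_parent_missed(events, a)}")
```

Under these assumptions, “skip_test 2” lands at beat 1.5 inside D4 and is dropped. “skip_test 3” lands at beat 3.0 inside E4 and is performed together with “skip_test 4”. This matches what I observe, though not what the tutorial describes.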

This seems logical, but it completely contradicts what is written in the tutorial text… What is the correct behaviour?

A similarly inconsistent behaviour occurs in tutorial 18 when @local is assigned to group actions. Here too, @local is interpreted correctly only if both of the first two notes are wrong; otherwise the actions happen at their scheduled beat positions. Moreover, in this tutorial the good and bad examples behave exactly the same (i.e. more or less correctly?)!!

After these tutorials one is left with many questions and a lot of confusion… It is not encouraging: if these simple elements already show such inconsistent behaviour, what will happen with more complex structures?