Hi Benjamin, I hope you are well.

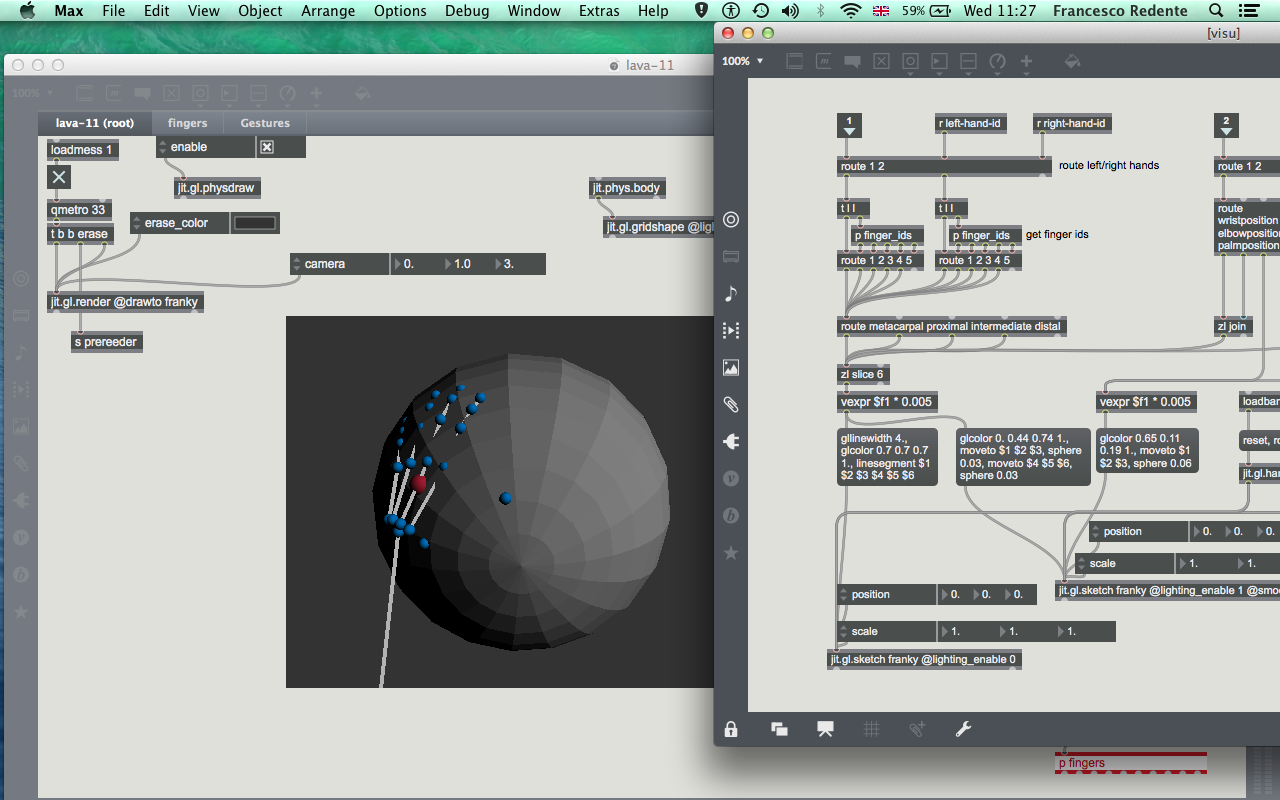

I have built physical hands (using a Leap Motion) to interact with objects within a world.

Everything works great. I have made six jit.phys objects which represent my palm position and fingertip positions. Now I can grab and move things and do other interactions with the world.

I have a quick question: I am using Graham’s Oculus patch (working fine btw) for the Rift.

GitHub - grrrwaaa/max_oculus: Max/MSP/Jitter external & example for the Oculus Rift

The main problem I have right now is that when I navigate around, my hands (the Leap Motion visualization) do not follow the head-tracking position, so they stay in the same place when I move within the world.

I know I can get the head pose (quat / position) from Graham's Oculus object, but I am not sure where to send those values so that the hands stay linked to the character position (navigation plus head position).
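For context, the underlying math is a standard rigid transform. Each palm or fingertip point is expressed relative to the head. It is rotated by the head quaternion and then offset by the head/navigation position before being sent to the physics objects. The sketch below covers only that math, and it rests on several assumptions. The Leap is treated as head-mounted, or the hands are meant to turn with the head. Quaternions use (x, y, z, w) order. `LEAP_SCALE` and `LEAP_OFFSET` are hypothetical tuning values. `hand_to_world` is a hypothetical helper and is not part of Graham's external.

```python
from typing import Tuple

Vec3 = Tuple[float, float, float]
Quat = Tuple[float, float, float, float]  # assumed order: (x, y, z, w)

# Hypothetical tuning values, not taken from the original patch.
LEAP_SCALE = 0.001                     # Leap reports millimetres; assume world units are metres
LEAP_OFFSET: Vec3 = (0.0, -0.2, -0.3)  # assumed hand origin relative to the eyes


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def rotate(q: Quat, v: Vec3) -> Vec3:
    """Rotate v by unit quaternion q: v' = v + w*t + u x t, where t = 2(u x v)."""
    u: Vec3 = (q[0], q[1], q[2])
    w = q[3]
    c = cross(u, v)
    t: Vec3 = (2.0 * c[0], 2.0 * c[1], 2.0 * c[2])
    ut = cross(u, t)
    return (v[0] + w * t[0] + ut[0],
            v[1] + w * t[1] + ut[1],
            v[2] + w * t[2] + ut[2])


def hand_to_world(point_mm: Vec3, head_pos: Vec3, head_quat: Quat) -> Vec3:
    """Scale and offset a Leap point, rotate it with the head, then move it to the head position."""
    local = tuple(LEAP_SCALE * p + o for p, o in zip(point_mm, LEAP_OFFSET))
    r = rotate(head_quat, local)
    return (head_pos[0] + r[0], head_pos[1] + r[1], head_pos[2] + r[2])


if __name__ == "__main__":
    s = 0.5 ** 0.5
    head_quat = (0.0, s, 0.0, s)  # head turned 90 degrees about the y axis
    palm = hand_to_world((0.0, 200.0, 0.0), (0.0, 1.6, 0.0), head_quat)
    print(palm)
```

In a patch, the same transform would be applied to all six points every frame. The results would replace the raw Leap values. If the Leap sits on the desk instead of the headset, the rotation step would likely be dropped and only the navigation offset added.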

Please let me know if anyone can help.

Thank you so much.